FAQ

- What is research data?

- What is research data management?

- Why should research data management be important to me?

- What do I have to keep in mind when planning my project?

- How do I create a good data management plan?

- Which specific demands do sponsors, publishers, and universities have?

Data Storage and Digital Preservation

- How can I structure my data?

- Which file formats should I choose?

- Where do I store my data during my work process?

- What should I consider when backing up my data?

- Where can I archive my data on a long-term basis?

Publishing and Sharing Research Data

- Why should I publish my data?

- Is there any reason against publishing?

- Which restrictions by data protection laws do I have to consider?

- Who can decide on whether to share or publish data?

- Do I own the copyright to my data?

- Can I control the use of my data?

- Which license should I choose?

- How can I publish my data?

- How can I find a suitable repository?

- What do I have to consider when uploading data into a repository?

- What are Metadata, Metadata Schema, Controlled Vocabularies and Documentations?

- What are Persistent Identifiers?

Introduction

What is research data?

The term “research data” generally refers to all kinds of (digital) data that represent the result of scientific work or that serve as a basis for such work. Research data is generated using a wide variety of methods, such as measurements, source research or surveys. Therefore, it is always subject- and project-specific. For additional information on defining research data, see here.

What is research data management?

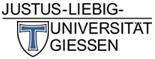

Research data management includes measures that create and preserve sustainable data. Thus, it is relevant throughout the entire data lifecycle (fig. 1).

Fig. 1: Research data lifecycle

Ideally, planning for research data management begins at the start of a research project and is updated regularly. Research data management is not limited to data storage and archiving. It also enables you to find, access and comprehend data and, as a result, to use your data well into the future. For further information on research data management see here.

Why should research data management be important to me?

There are several good reasons for systematically tackling research data management, which likewise stress the importance of good scientific practice:

- Research funding: Research funders often require research data management and, in some cases, data sharing. Both serve to validate research results and prevent multiple funding. (Which specific demands do sponsors, publishers, and universities have?)

- Reusability: Good research data management minimizes the risk of losing data. Obtaining data is usually time-consuming and cost-intensive. Research data management ensures that well-prepared and documented research data remains permanently usable and reusable, even beyond the ten years required by good scientific practice.

- Reproducibility: Long-term reproducibility is ensured by appropriately maintaining experimentally obtained research data.

- Verifiability: Results are verifiable in the long term by documenting research data and their origin.

- Citability: Data publications are citable as independent publications and thus increase the visibility of your own research.

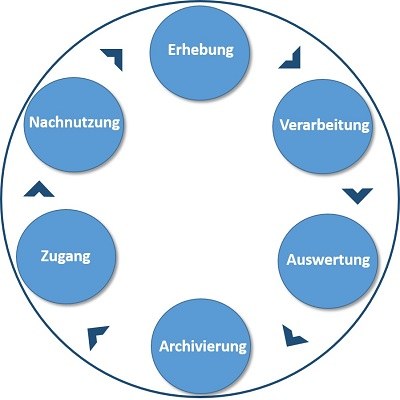

In the figure below, you can see different possible aims of research data management:

Fig. 2: Aims of research data management

What do I have to keep in mind when planning my project?

Ideally, you do not start thinking about research data management only after you have already gathered your data. Rather, it is best to consider how you want to handle your data before you create it. You can use the data lifecycle for orientation. Kassel University’s guidelines (Ger) on what to consider when managing research data can give you an overview:

- Appoint the people responsible for setting up and controlling research data management at your institution.

- Check whether there are institute- and discipline-specific or general requirements and recommendations on how to handle research data.

- Always check which requirements on archiving and publishing research data you have to meet as early as possible. (Which specific demands do sponsors, publishers, and universities have?)

- Check which research data is collected during your research project.

- Think about which research data is to be published and provided for reuse.

- Think about how to store and archive your research data. (Data storage and digital preservation)

- What options do you have for storing and archiving research data? Could you use a generalist or discipline-specific repository? (How can I find a suitable repository?)

- Clear up all legal questions on storing and sharing research data. You might have to consider data protection and copyright.

- Create a data management plan to document your decisions. It will also serve as validation of your progress and project implementation. (How do I create a good data management plan?)

- Update your data management plan regularly during the course of your research.

How do I create a good data management plan?

A data management plan records the handling of your research data all the way from the planning stage to the completion of your research project. It is to be understood as a "living document" which, if necessary, can and must be adapted to changes, new findings or problems. To create your data management plan, these tools might be useful:

- RDMO: The Research Data Management Organiser by the Leibniz Institute for Astrophysics Potsdam is currently in beta phase. The aim of the project is not only to support you in creating data management plans according to the needs of research funders, but also to guide you in planning, executing and maintaining your research data management.

- DMPonline: Run by the British Digital Curation Centre (DCC) and thus mainly geared to the needs of British researchers. However, it can also be used for Horizon 2020 projects, for which Humboldt University of Berlin has provided guidelines.

- DMPTool: Operated by the California Digital Library. The website also offers examples of data management plans. Because of the different funding schemes, it is only of limited use for German/European projects.

- ARGOS: A European online tool developed by OpenAIRE to create data management plans, based on the open source software OpenDMP. The registration process is designed to be very open: you can log in directly with your ORCID iD, Google account, or even Facebook or Twitter account. Data management plans created with ARGOS are also internationally interchangeable, as they are directly available in the DMP metadata standard of the Research Data Alliance (RDA).

There are also several checklists, templates and wizards that can give you further aid in creating your data management plan:

Checklists:

Examples and Templates:

- DMP Catalogue of the LIBER Research Data Management Working Group

- Exemplary-DMP for BMBF (Federal Ministry of Education and Research) proposals: BMBF proposal (Ger)

- Exemplary-DMP for DFG (German Research Foundation) proposals: DFG proposal (Ger)

- Science Europe [2021]: Practical Guide to the International Alignment of Research Data Management (DMP Template from page 15)

- DMP Template for Horizon Europe (in .docx as download)

- Search on Zenodo.org with Keyword "DMP"

- DMP Template RWTH Aachen (Ger)

Wizards:

- CLARIN-D (Common Language Resources and Technology Infrastructure)

- KomFor (The centre of competence for research data in the earth and environmental science)

Exemplary Data Management Plans:

- Public DMPs using DMPTool

- DCC - Example DMPs and guidance

- University of Leeds - DMP tools and examples

- UC San Diego - Sample NSF DMPs

There is also Humboldt-University’s video tutorial (Ger) to give you a brief introduction to DMPs.

Which specific demands do sponsors, publishers, and universities have?

- Deutsche Forschungsgemeinschaft (DFG) (= German Research Foundation)

In its “Proposals for Safeguarding Good Scientific Practice” (Eng + Ger), the DFG states that “Primary data as the basis for publications shall be securely stored for ten years in a durable form in the institution of their origin”. It goes on with:

“In the interest of transparency and to enable research to be referred to and reused by others, whenever possible researchers make the research data and principal materials on which a publication is based available in recognised archives and repositories in accordance with the FAIR principles (Findable, Accessible, Interoperable, Reusable).”

In 2015, the DFG Guidelines on the Handling of Research Data were passed. They state further recommendations on providing data and planning data driven projects, like:

“Applicants should consider during the planning stage whether and how much of the research data resulting from a project could be relevant for other research contexts and how this data can be made available to other researchers for reuse. Applicants should therefore detail in the proposal what research data will be generated or evaluated during a scientific research project. Concepts and considerations appropriate to the specific discipline for quality assurance and the handling and long-term archiving of research data should be taken as a basis.”

- European Commission (EC)

The EC’s “Open Research Data Pilot” is part of the EU research and innovation program Horizon 2020. Its aim is to improve the access and reusability of research data originating in Horizon 2020 projects. The Open Research Data Pilot’s basic principle is the motto “as open as possible, as restricted as necessary”. (EC Guidelines on FAIR Data Management in Horizon 2020, p. 4)

From 2014 to 2016, only selected areas of Horizon 2020 were included in the pilot. Since the revised 2017 version of the program was released, all areas have been covered.

There are three main obligations:

Data management plan: You have to create a data management plan according to the template. It has to be submitted within the first six months and updated whenever relevant changes occur (or at least at interim and final evaluations).

Data storage: Your research data has to be stored in an institutional, project-specific or discipline-specific data repository as early as possible (‘underlying data’) or according to the data management plan (‘other data’).

Publication: If possible, your data should be published using an open license (preferably CC-BY or CC0) without use restrictions. The publication has to include the necessary contextual information and tools.

However, if there are legitimate reasons, a partial or complete waiver of these requirements is possible.

You can find further information by looking at the following:

Guidelines on FAIR Data Management in Horizon 2020

Guidelines on Open Access to Scientific Publications and Research Data in Horizon 2020

Horizon 2020 Online Manual: Open Access and Data Management

Horizon 2020: Annotated Model Grant Agreement (AGA)

OpenAIRE Research Data Management Briefing Paper

- Publishers

Publishers increasingly require you to provide the research data on which a publication is based. Therefore, you should always check these requirements before you publish your work. Examples of such guidelines can be found here:

Public Library of Science (PLOS): Data Availability Policy / Materials and Software Sharing Policy

Nature Publishing Group: Availability of Data, Material and Methods Policy

Science: Data and Materials Availability Policy and Preparing Your Supplementary Materials

BioMed Central: Availability of Supporting Data

Elsevier: Text and Data Mining; Research Data Policy

- Justus-Liebig-University Giessen

On 29 October 2018, Justus Liebig University Giessen adopted research data guidelines (Research Data Policy) (Ger). These guidelines define the principles according to which members of the university are to handle research data.

Data Storage and Digital Preservation

How can I structure my data?

During the work process you will often create not only many data sets, but also different versions of your data. For efficient and collaborative workflows, you should decide on specific conventions for naming and versioning your data. This also supports the long-term traceability and usability of your data. Moreover, it can be useful to define separate directory structures for raw data, analysis data, data evaluations and any other project materials. For further information on file organization see Jensen 2012, pp. 40-42 (Ger) and Christian Krippes: Coffee Lecture on File and Data Organization (Ger).

At the different stages of modifying your data (e.g. raw data, cleaned data, data ready for analysis) you should create write-protected versions. You should only use copies of those original files for further processes.
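The following is a minimal sketch of how a write-protected snapshot could be created at each stage, assuming a local file system. The function name `freeze_copy` and the folder layout are hypothetical and not part of any tool mentioned in this FAQ.

```python
import shutil
import stat
from pathlib import Path


def freeze_copy(source: Path, archive_dir: Path) -> Path:
    """Copy `source` into `archive_dir` and make the copy read-only.

    `archive_dir` is a hypothetical folder for frozen stage versions
    (e.g. "raw", "cleaned"); adapt it to your own directory structure.
    """
    archive_dir.mkdir(parents=True, exist_ok=True)
    frozen = archive_dir / source.name
    if frozen.exists():
        # Never silently overwrite an existing frozen version.
        raise FileExistsError(f"{frozen} already exists")
    shutil.copy2(source, frozen)  # copy2 also preserves timestamps
    # Clear the write bits for owner, group and others.
    mode = frozen.stat().st_mode
    frozen.chmod(mode & ~(stat.S_IWUSR | stat.S_IWGRP | stat.S_IWOTH))
    return frozen
```

For further processing you would then work on a separate copy of the frozen file, not on the frozen file itself. Note that file permissions only guard against accidental changes; they do not replace backups.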

Naming conventions can vary widely depending on your discipline and your kind of data. However, names should always reflect the kind of data (raw data, cleaned data, analytical data) and the data format (work file, result file etc.).

The file name should always include the date of storage in YYYYMMDD format. Place the date at the beginning or end of the file name to ease sorting. Do not use special characters, umlauts or spaces; use underscores instead. The names should always be uniform, clear and meaningful.

Examples for naming data files (see also: HU Berlin: Structure files):

- [sediment]_[sample]_[instrument]_[YYYYMMDD].dat

- [experiment]_[reagent]_[instrument]_[YYYYMMDD].csv

- [experiment]_[experiment_set-up]_[test_subject]_[YYYYMMDD].sav

- [observation]_[location]_[YYYYMMDD].mp4

- [interview_partner]_[interviewer]_[YYYYMMDD].mp3

The file names listed follow the “snake_case” style of writing, which uses underscores to replace spaces. Usually, the letters following the underscores are lower case letters, but it is also possible to use capitals.

Apart from snake_case, there are several other conventions for naming files. One of the most common is “camelCase”. An example would be “SedimentSampleInstrumentYYYYMMDD.dat”. Each word begins with an uppercase letter (this variant is also known as UpperCamelCase or PascalCase), and there is no underscore, as in snake_case, or any other special character. One disadvantage of this convention concerns versioning (see next paragraph): version 1.0.0 would be recognizable as 1_0_0 using snake_case, but would appear as 100 using camelCase. Whichever convention you choose, use the same one throughout your entire research project.
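The naming rules described in this section can be sketched as a small helper. This is an illustration under stated assumptions, not a prescribed tool: the function name `build_file_name` is hypothetical, the date is placed at the end, and characters outside ASCII letters, digits and underscores (including umlauts) are simply dropped instead of being transliterated.

```python
import re
from datetime import date


def build_file_name(parts: list[str], day: date, extension: str) -> str:
    """Join descriptive parts and a YYYYMMDD date with underscores."""
    cleaned = []
    for part in parts:
        # Spaces become underscores, as recommended for snake_case names.
        token = re.sub(r"\s+", "_", part.strip())
        # Assumption: anything else that is not an ASCII letter, digit or
        # underscore (special characters, umlauts) is dropped.
        token = re.sub(r"[^A-Za-z0-9_]", "", token).strip("_")
        if not token:
            raise ValueError(f"part {part!r} has no usable characters")
        cleaned.append(token.lower())
    # The date goes last so that files with the same prefix sort by date.
    cleaned.append(day.strftime("%Y%m%d"))
    return "_".join(cleaned) + "." + extension.lstrip(".")
```

For example, `build_file_name(["sediment", "sample 3", "XRF"], date(2024, 3, 5), "dat")` returns `sediment_sample_3_xrf_20240305.dat`. The part names and the date are made up for illustration.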

You can specify the file version in the file name in order to easily identify changes to your data. A well-known concept of versioning based on the DDI (Data Documentation Initiative) standard is: Major.Minor.Revision.

Starting from version “1.0.0” the following is changed:

1. the first position, if cases, variables, waves or samples are added or deleted

2. the second position, if data are corrected in a way that affects the analysis of your data

3. the third position, if there are minor revisions only that are of no consequence to interpreting your data

Versioning can also be supported by using appropriate software (Free Version Control Software, e.g. Git).
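The Major.Minor.Revision scheme can be sketched as a small data structure. The class and method names below are hypothetical. Resetting the lower positions to zero when a higher position changes is an assumption borrowed from common versioning practice; the scheme as described above does not state it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataVersion:
    major: int
    minor: int
    revision: int

    @classmethod
    def parse(cls, text: str) -> "DataVersion":
        """Parse a string such as "1.0.0"."""
        major, minor, revision = (int(part) for part in text.split("."))
        return cls(major, minor, revision)

    def bump(self, change: str) -> "DataVersion":
        """Return the next version for a given kind of change."""
        if change == "structure":
            # Cases, variables, waves or samples were added or deleted.
            return DataVersion(self.major + 1, 0, 0)  # reset is an assumption
        if change == "correction":
            # Data were corrected in a way that affects the analysis.
            return DataVersion(self.major, self.minor + 1, 0)
        if change == "revision":
            # Minor revision with no consequence for interpretation.
            return DataVersion(self.major, self.minor, self.revision + 1)
        raise ValueError(f"unknown kind of change: {change!r}")

    def __str__(self) -> str:
        return f"{self.major}.{self.minor}.{self.revision}"

    def as_file_suffix(self) -> str:
        """Underscore form for use in snake_case file names."""
        return f"{self.major}_{self.minor}_{self.revision}"
```

Starting from `DataVersion.parse("1.0.0")`, a correction that affects the analysis gives `1.1.0`, which would appear in a snake_case file name as `1_1_0`.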

Which file formats should I choose?

Choosing the right file format is important, especially for long-term storage and use of data. Some characteristics are generally favored: file formats should not be encrypted, packed, or proprietary/patented. Accordingly, open, documented standards are preferred. Recommendations on which file formats to prefer can be found at RADAR, HU Berlin, UK Data Service or the Library of Congress.

| Data Format | Recommended | Less Suitable | Unsuitable |
|---|---|---|---|
| Audio, Sound | *.flac / *.wav | *.mp3 | |
| Computer-aided Design (CAD) | *.dwg / *.dxf / *.x3d / *.x3db / *.x3dv | | |
| Databases | *.sql / *.xml | *.accdb | *.mdb |
| Raster Graphics & Images | *.dng / *.jp2 (lossless compression) / *.jpg2 (lossless compression) / *.png / *.tif (uncompressed) | *.bmp / *.gif / *.jp2 (lossy compression) / *.jpeg / *.jpg / *.jpg2 (lossy compression) / *.tif (compressed) | *.psd |
| Raw Data and Workspace | *.cdf (NetCDF) / *.h5 / *.hdf5 / *.he5 / *.mat (from version 7.3) / *.nc (NetCDF) | *.mat (binary) / *.rdata | |
| Spreadsheets and Tables | *.csv / *.tsv / *.tab | *.odc / *.odf / *.odg / *.odm / *.odt / *.xlsx | *.xls / *.xlsb |
| Statistical Data | *.por | *.sav (IBM®SPSS) | |
| Text | *.txt / *.pdf (PDF/A) / *.rtf / *.tex / *.xml | *.docx / *.odf / *.pdf | *.doc |
| Vector Graphic | *.ait / *.cdr / *.eps / *.indd / *.psd | | |
| Video¹ | *.mkv | *.avi / *.mp4 / *.mpeg / *.mpg | |

¹ Besides the file format (or container format), the codec used and the compression type also play an important role.

Where do I store my data during my work process?

It is of the utmost importance to back up your data regularly to guard against technical or human error. Securing data is the responsibility of the researcher. The Hochschulrechenzentrum (HRZ) (= university computer center) offers several options for data storage:

- Cloud Storage: JLUbox (Ger):

The JLUbox offers 100 GB of free cloud storage for all employees (g-identification) of Giessen University. You can use it to share and synchronize data, to work on documents collaboratively, and to provide data for students and external users.

- Network Drives: winfile & data1 (Ger)

The HRZ offers two kinds of data storage on network drives:

- Backup Service: IBM Spectrum Protect ISP (Ger)

The HRZ offers regular and automated backups of servers via IBM Spectrum Protect (formerly Tivoli Storage Manager). You have to apply for access to this system. The service is aimed at IT system administrators and other technically knowledgeable persons.

In case you need more data storage for larger research projects, please contact the HRZ (e-mail) at an early stage.

What should I consider when backing up my data?

Good research data management also means that you, as a researcher, are as well prepared as possible for data loss. Therefore, you should create a backup plan at the beginning of your research project, ideally including regular backup routines. A backup plan should answer the following questions:

- Which backup tool do you use?

- Which data should be backed up?

- Where do you back up your data?

- How often do you back up your data?

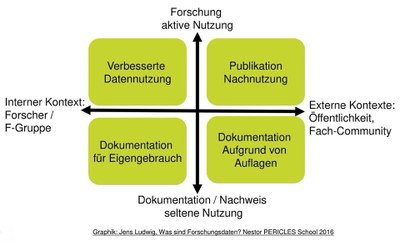

You should also follow the so-called 3-2-1 backup rule (see Fig. 3). This rule states that you should always keep at least 3 copies of your data, on 2 different storage media (e.g. a USB stick and an external hard disk), with 1 copy at a decentralized storage location (e.g. the JLUbox or winfile). It is important that all 3 copies are always up to date with the original file, which is why automated backup routines are best. Instructions on how to create automated backup routines using Windows Task Scheduler can be found here.

Fig. 3: 3-2-1 Backup Rule
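As a minimal sketch of one backup routine in the spirit of the 3-2-1 rule, the following copies a file to several destination folders and verifies each copy with a SHA-256 checksum. The function names are hypothetical, and the destination folders are assumed to be mount points of different storage media and of a decentralized location. The script cannot check that they really are on different devices.

```python
import hashlib
import shutil
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Return the SHA-256 checksum of a file, read in 1 MiB chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()


def back_up(source: Path, destinations: list[Path]) -> list[Path]:
    """Copy `source` into every destination folder and verify each copy."""
    expected = sha256_of(source)
    copies = []
    for folder in destinations:
        folder.mkdir(parents=True, exist_ok=True)
        target = folder / source.name
        shutil.copy2(source, target)  # overwrites an older backup copy
        if sha256_of(target) != expected:
            raise OSError(f"checksum mismatch for {target}")
        copies.append(target)
    return copies
```

Such a function could be called from a scheduled task so that all copies stay up to date with the original file.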

If you are working with personal data or other legally sensitive data, keep in mind that backing up to a decentralized storage location usually also involves backups to tape that you no longer have any control over. For example, if you back up your data to the JLUbox, further backups are made at the IT Service Centre (HRZ). This makes it difficult for you to comply with a possible request for deletion of the data. So please encrypt such legally sensitive data before storing it at a decentralized storage location. To do this, you can either create a password-protected zip folder or use the VeraCrypt or Rohos MiniDrive tools. (Which restrictions by data protection laws do I have to consider?)

Where can I archive my data on a long-term basis?

According to good scientific practice, research data should be stored for a minimum of 10 years. In order to do that, there are several discipline-specific and generalist repositories. (How can I find a suitable repository?)

Keep in mind that uploading your data into a repository is not the same as publishing it. For example, you can define a period of time during which a data package is not yet accessible, but the metadata is already visible. Such embargo periods can be extended by a curator. For further information on ‘embargos’ see: Embargo (Ger). If you decide to publish your data, data access and editing rights can also be regulated in contracts or licenses. (Can I control the use of my data? / Which license should I choose?)

Always note the respective requirements of research funders and publishers and data protection regulations. (Who can decide on whether to share or to publish data? / Which restrictions by data protection laws do I have to consider?)

Publishing and Sharing Research Data

Why should I publish my data?

There are personal as well as scientific benefits to publishing your data. Firstly, published data is citable as independent scientific work, which increases the visibility of your own research. As studies have shown, publications are cited more often if the underlying data has been published (see Piwowar / Vision 2013).

Secondly, data sharing enables you to re-use already existing data. New types of research questions can therefore be investigated without duplicating work or incurring unnecessary costs.

Is there any reason against publishing?

There are some situations in which you should only publish your data under specific conditions or not at all. The most important precondition for a publication is that you have the necessary rights to do so. (Who can decide on whether to share or to publish data? / Do I own the copyright to my data?)

Moreover, your data could be confidential or personal data that can only be published in anonymized form or with the consent of the persons affected. (Which restrictions by data protection laws do I have to consider?)

If you decide to publish with a publisher, choose the publisher carefully and do not fall for predatory publishing. This short presentation by Werner Dees (Ger) will give you a brief overview on how to recognize predatory publishers.

Which restrictions by data protection laws do I have to consider?

Personal data “means any information relating to an identified or identifiable natural person (data subject); an identifiable natural person is one who can be identified, directly or indirectly, in particular by reference to an identifier such as a name, an identification number, location data, an online identifier or to one or more factors specific to the physical, physiological, genetic, mental, economic, cultural or social identity of that natural person” (§ 46 Abs. 1 BDSG, the German Federal Data Protection Act; the same definition appears in Art. 4(1) of the General Data Protection Regulation (GDPR)). There are strict requirements for collecting, using and passing on personal data. Information that can be linked to an identified or identifiable person has to be deleted from your research data before archiving, providing and publishing it. Depending on the kind of data, there are several ways of anonymizing data.

If you want to process personal data, you usually need the consent of the persons affected. The aim of your research has to be clearly defined and the persons affected have to be able to estimate the consequences.

Moreover, research data such as company data can contain confidential information (protection of undisclosed know-how and trade secrets). Additionally, non-disclosure agreements might prohibit a data publication.

Who can decide on whether to share or publish data?

Possible rights owners or co-owners to the data are researchers, employers, customers, research funders and/or (commercial) contractors. Contracts determine who has a say in sharing or publishing research data. Usually, the results of instruction-bound research are the property of the employer/funder. However, in case of private research, researchers can decide on how to use their data on their own.

Do I own the copyright to my data?

Research objects (some research data, too) can be protected by the copyright act as creative works. This includes literary works, computer programs, musical works, pantomimes (including choreographic works), works of the fine arts (including architecture and applied arts), photographic works, cinematographic works, and scientific and technical representations.

Usually, research data does not reach the necessary threshold of originality and is therefore not a creative work. Nevertheless, there are some exceptions, such as data protected by ancillary copyright, e.g. photographs, moving pictures or sound carriers.

Often, however, research data is protected by copyright as part of a database or by the ancillary copyright for databases.

Research data not protected by property rights can normally be used by anyone for any purpose without permission or obligation to pay for it.

Can I control the use of my data?

If you own the copyright or ancillary copyright to your data, you can contractually stipulate several aspects of using your data, such as how to use it, who uses it, the period and purpose of use etc. Since individual case regulations by contract are very complex, there are several solutions for standardizing regulations on rights of use. E.g. Leibniz Institute for Psychology Information (ZPID) offers standardized contracts for using data that has been gained in psychological research. Another example are GESIS user contracts (access restrictions for particularly sensitive social science data). If your data is not to be subject to any specific access or use restrictions, it is advisable to use standardized licenses such as Creative Commons or Open Data Commons. (Which license should I choose?)

Which license should I choose?

Publishing data under a specific license allows you to specify in detail how your data can be used. This creates legal certainty for both data provider and data user. Even if you do not want to impose any restrictions, it is important to document this waiver clearly.

Although data are usually not subject to copyright law, you should nevertheless treat them as potentially worth protecting. There are various license models for this purpose. The most popular one is Creative Commons. CC licenses are independent of the licensed content and cover copyright, ancillary copyright and, where they exist, sui generis database rights.

The Open Knowledge Foundation’s “Open Data Commons” license package has been specifically created for publishing data. Apart from the unconditional license (Open Data Commons Public Domain Dedication and License (PDDL)), it offers three other packages:

- Open Data Commons Attribution License (ODC BY v1.0): Condition of attribution

- Open Data Commons Open Database License (ODbL v1.0): Condition of Share-Alike

- Database Contents License (DbCL v1.0): Condition of Share-Alike, including database contents

Regardless of its legal liability, the CC-BY license best fulfills the idea of Open Access and Open Science, whereas “Share-Alike” can lead to compatibility issues with other licenses, and prohibiting adaptations can lead to restrictions on use (e.g. data mining, issues regarding long-term storage). Prohibiting commercial use will complicate inclusion in commercial databases, which reduces the potential visibility of your research.

Whichever license you choose, choose wisely. An in-depth analysis of legal issues can be found here: Andreas Wiebe & Lucie Guibault (2013). Frank Waldschmidt-Dietz’ presentation (Ger) and video on Open Educational Resources (OER) will give you further information on Creative Commons licenses, licensing in general and the benefits of Open Access licenses for education.

Regardless of the terms of use, of course you have to meet the rules of good scientific practice, which require citing your sources.

How can I publish my data?

You can choose between publishing your data in interdisciplinary or discipline-specific data repositories. Choosing a discipline-specific data repository is usually the most convenient way, since demand for your data is most likely to arise within your discipline. Additionally, discipline-specific repositories are most likely to meet your discipline’s standards and metadata schema. Furthermore, publishing your data in a discipline-specific repository will increase your research’s visibility. Repositories such as EU-sponsored Zenodo, Dryad or Figshare are interdisciplinary repositories. You can find a comparison of them here (Ger). Moreover, data can be published in data supplements of scientific journals. Although this way of publishing data is becoming increasingly important, additional archiving methods should be used to ensure long-term data availability.

How can I find a suitable repository?

This set of questions can help you decide which repository to choose:


In order to find a suitable repository, you can use the Registry of Research Data Repositories (re3data.org). Re3data is a web-based directory in which repositories are made accessible. You can simply search for a suitable repository. Numerous filters allow you to narrow down your search, e.g. by subject area or data type.

What do I have to consider when uploading data into a repository?

- File format:

It is important to use the right file format. Some repositories have strict requirements on which format to use, while others only make recommendations or are open to all formats. Therefore, you should decide on the right format at an early stage of your research process (How do I create a good data management plan?). General information and specific links on file formats can be found here: Which file formats should I choose?

- Metadata:

Metadata has to be documented precisely in order to make your data traceable and usable. (What are Metadata, Metadata Schemes, Controlled Vocabularies and Documentations?)

- Publication:

Uploading data into a repository does not equal instant publication. There might be reasons for an embargo period or a partial publication only. Embargos are especially common in business-related academic fields. Thus, you have to consider possible reasons against an immediate publication (Is there any reason against publishing?).

- Conditions:

Contemplate the conditions you want to publish your data under. There are different types of licensing models to choose from (Which license should I choose?).

What are Metadata, Metadata Schemes and Documentations?

Metadata is data about other data or resources, in this case about research data. It describes research data in order to

- optimize data findability,

- ensure that the data can be understood by subsequent users,

- enable linking similar research data using the same standardized metadata schema.

The most basic information includes Title, Author/Main Researcher, Institution, Persistent Identifier, Location & Time, Topic, Rights, File Name, Format etc.

Metadata schemata (i.e. metadata standards) are compilations of categories describing data. There are interdisciplinary / independent as well as discipline-specific / dependent standards. Metadata schemata are to ensure that every researcher uses the same vocabulary to describe their data, and thus to guarantee the interoperability and comparability of data sets.

The table below will give you an overview on exemplary metadata standards for several disciplines. If your specific discipline is not listed, you can have a look at the Digital Curation Center's list on disciplinary metadata.

| Discipline | Metadata Standard |
|---|---|
| Interdisciplinary standards | DataCite Schema, DublinCore, MARC21 (Ger), RADAR |
| Humanities | |
| Earth sciences | |
| Climate science | |
| Arts & cultural studies | |
| Natural sciences | CIF, CSMD, Darwin Core, EML, ICAT Schema |
| X-ray, neutron and muon research | |
| Social and economic sciences | |

Before starting to document your data, you should search for existing metadata schemata. This will improve the interoperability of the research data to be created with already existing data, and it will save you the effort of developing your own metadata schema. If there is no existing metadata standard to provide the description categories necessary for your research, it is still worth using a renowned, already existing subject-specific standard as a basis to build on, e.g. by including additional categories and informing those responsible for the standard so that they can extend the schema. Metadata standards are living entities that can be adapted and enriched with new categories according to the needs of researchers. Be careful not to make any changes to existing elements or attributes, so as not to jeopardize interoperability.

It is possible, and usually even necessary, to use several metadata schemata. You should always use at least one subject-independent metadata schema (preferably Dublin Core) to describe your data, because this way you can cover the general description categories listed as basic information at the beginning of this section. Fig. 4 depicts a metadata section using the Dublin Core standard. Subject-specific metadata standards, on the other hand, allow you to structure your data using descriptive strategies that are more content-oriented and may differ from discipline to discipline.

Fig. 4: Example of metadata in the Dublin Core Standard
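To make the general description categories more concrete, the following minimal sketch builds a small record using elements of the Dublin Core element set (`title`, `creator`, `publisher`, `identifier`, `date`, `subject`, `rights`, `format`). It is only an illustration: the wrapper element name `metadata`, the helper `build_dublin_core_record` and all field values (including the DOI) are hypothetical placeholders, and a real repository may expect a different wrapper or additional elements.

```python
import xml.etree.ElementTree as ET

# Namespace of the Dublin Core Metadata Element Set, version 1.1.
DC_NS = "http://purl.org/dc/elements/1.1/"
ET.register_namespace("dc", DC_NS)


def build_dublin_core_record(fields):
    """Return a <metadata> element with one dc:* child per (name, value) pair.

    The wrapper name "metadata" is an assumption made for this sketch.
    """
    record = ET.Element("metadata")
    for name, value in fields:
        child = ET.SubElement(record, f"{{{DC_NS}}}{name}")
        child.text = value
    return record


# Placeholder values for illustration only; none of them refer to real data.
example_fields = [
    ("title", "Example survey data set"),
    ("creator", "Doe, Jane"),
    ("publisher", "Example University"),
    ("identifier", "doi:10.1234/example"),
    ("date", "2020-01-01"),
    ("subject", "Research data management"),
    ("rights", "CC BY 4.0"),
    ("format", "text/csv"),
]

record = build_dublin_core_record(example_fields)
xml_text = ET.tostring(record, encoding="unicode")
print(xml_text)
```

Each tuple maps one description category to one Dublin Core element, so subject-specific categories would go into an additional, discipline-specific schema rather than into changed Dublin Core elements.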

Consequently, metadata determine which information is to be presented. To get the best possible results when searching for and using data, this information has to be presented using a uniform vocabulary. In order to do so, there are several discipline-specific and interdisciplinary controlled vocabularies, like thesauri, classifications and authority controls.

| Types of controlled vocabularies | Names |
|---|---|
| Unambiguous identification of persons, items or places | The Integrated Authority File (GND) / GeoNames / International Standard Name Identifier (ISNI, ISO 27729) |
| General, interdisciplinary classification systems | Dewey Decimal Classification (DDC) |
| Disciplinary classification systems | Social Sciences (Ger) |
| Disciplinary vocabularies | Agricultural Information Management Standard (AGROVOC) / Thesaurus for the Social Sciences (TheSoz) / Thesaurus Technology and Management (Ger) (TEMA) |

You can find an overview of different systems in the Basel Register of Thesauri, Ontologies & Classifications (BARTOC) and in Taxonomy Warehouse.

Documentation is usually more than a mere metadata description. It is a more in-depth (subject-specific) form of indexing in which variables, instruments, methods etc. are described in detail, so that the origin of the data becomes apparent. Such a description is often essential to understand, verify and use the data.

The JISC Guide on Metadata and the University of Edinburgh’s interactive Mantra Course on Documentation, Metadata, Citation will offer you further introductory information on metadata.

What are Persistent Identifiers?

A Persistent Identifier (PID) is a code uniquely identifying a digital resource. This code is a unique, unchangeable name with which a permanent link to the digital resource can be created. Because of the unique reference, the linked content becomes citable (How do I cite research data?). The kind of resource does not matter: it can be a research record, a journal article, a video or any other kind of resource. The Persistent Identifier ensures that the resource remains retrievable even if, for example, the internet address of the server changes. Therefore, Persistent Identifiers are key to long-term storage and availability of research data.

There are different kinds of PIDs. An increasingly common one is the Digital Object Identifier (DOI). An example of a DOI is: 10.5282/o-bib/2018H2S14-27. Worldwide, this DOI is assigned only once. If “https://www.doi.org/” is added in front of the DOI, this creates a link to an o-bib.de article (see https://www.doi.org/10.5282/o-bib/2018H2S14-27). Another common identifier for online publications, apart from the DOI, is the Uniform Resource Name (URN).

Reputable publication platforms, such as Zenodo and Figshare, automatically reserve a DOI for your data set when you publish your data. If you publish in a different, discipline-specific repository, you should make sure that this repository also offers DOIs or another kind of PID. (How can I publish my data? / How can I find a suitable data repository?)
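The step from a bare DOI to a resolvable link, as described for the o-bib example in this section, can be sketched in a few lines. This is only an illustration: the function name `doi_to_url` is hypothetical, and the check that a DOI begins with `10.` is a deliberately simple plausibility test, not a full validation.

```python
# Resolver address as given in the text of this section.
DOI_RESOLVER = "https://www.doi.org/"


def doi_to_url(doi: str) -> str:
    """Prefix a bare DOI with the resolver address to form a permanent link."""
    doi = doi.strip()
    # All DOIs start with the directory indicator "10."; anything else is rejected.
    if not doi.startswith("10."):
        raise ValueError(f"not a DOI: {doi!r}")
    return DOI_RESOLVER + doi


link = doi_to_url("10.5282/o-bib/2018H2S14-27")
print(link)
```

Because the DOI itself never changes, the same function keeps producing a working link even if the publisher moves the article to a different server.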

Finding and Using Research Data

Where can I find research data?

Research data is increasingly available for reuse, not least because funders, publishers and institutions require or recommend making data accessible. To find suitable research data for your own research, you should first have a look at relevant services in your own discipline. These can be institutional or specialized repositories as well as Data Journals (List). Repositories sorted by discipline can be found here: re3data.org

Furthermore, you can search using generic search engines. Their disadvantage is that they often cannot represent the detailed metadata schemata of their sources adequately. Moreover, the metadata differ greatly in what they identify: individual data items, data sets or data collections.

Three of the most common search engines are:

- Metadata found in repositories and databases is obtained via OAI-PMH. You can find research data by searching for document type “Dataset”.

- Searches metadata by using different sources, such as CLARIN or Global GBIF.

- Searches entity metadata, e.g. research data (Resource Type “Dataset”) registered at DataCite using DOIs. This metadata is partly also searched by the other two services.

When reusing data, the respective rights (licenses, license agreements) are binding. Among other things, they can determine who may use the data, for which purpose and for which period of time.

If you cannot or do not want to use existing research data, you can of course collect data yourself using reputable research practices. For example, Dr. Samuel de Haas’ and Jan Thomas Schäfer’s Coffee Lecture (Ger) gives you information on how to collect data using web scraping and text mining and on how to handle big data.

How do I cite research data?

You have to cite your data correctly to meet good scientific practice and make research data usable and reusable.

Citing external data acknowledges the scientific achievement of its “author”. As with other publications, the formal conventions for citing data may differ. In terms of content, however, the cited data must be uniquely identifiable. The FORCE11 Data Citation Synthesis Group has developed Recommendations for Citing Data. According to these recommendations, a full data citation includes:

Author(s), Year, Dataset Title, Data Repository or Archive, Version, Global Persistent Identifier.

Further, possibly useful, optional additions are Edition, URI, Resource Type, Publisher, Unique Numeric Fingerprint (UNF) and Location (see Alex Ball & Monica Duke 2015: How to Cite Datasets and Link to Publications).
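As a rough illustration of how the six required components fit together, the following sketch assembles them into a single citation string. The class name `DataCitation`, the punctuation between the components and all example values (including the DOI link) are assumptions made for this example; the recommendations name the components, while the exact layout depends on the citation style you have to follow.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class DataCitation:
    """The six components of a full data citation listed above."""

    authors: List[str]
    year: int
    title: str
    repository: str
    version: str
    identifier: str  # global persistent identifier, e.g. a DOI link

    def format(self) -> str:
        # The separators used here are one possible layout, not a prescribed style.
        author_part = "; ".join(self.authors)
        return (
            f"{author_part} ({self.year}): {self.title}. "
            f"{self.repository}. Version {self.version}. {self.identifier}"
        )


# Placeholder values for illustration only; they do not refer to a real data set.
citation = DataCitation(
    authors=["Doe, Jane", "Roe, Richard"],
    year=2020,
    title="Example survey data set",
    repository="Example Repository",
    version="1.0",
    identifier="https://www.doi.org/10.1234/example",
)
formatted = citation.format()
print(formatted)
```

Keeping the components in separate fields makes it easy to add the optional elements mentioned above, such as Resource Type or Publisher, without changing the required ones.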