Thesis topics

Currently, we are offering 48 Bachelor and 25 Master theses. Interested Master candidates should contact the potential supervisor by May 28.

If you are interested in completing a Master thesis in Experimental Psychology but have not decided on a topic yet, or if you have questions about one of the research areas below, please get in touch.

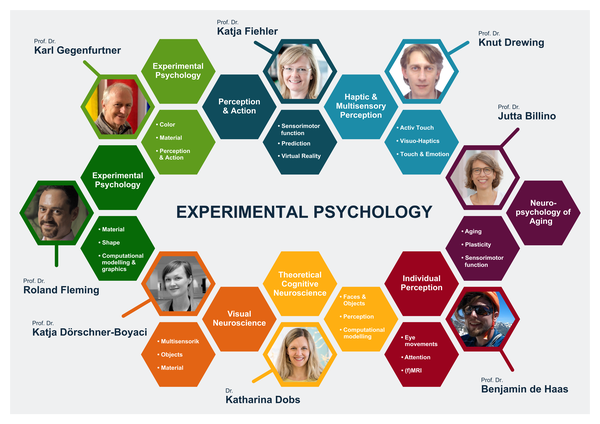

Theses can be completed in the following areas:

- Lifespan neuropsychology of perception and sensorimotor control (Jutta Billino)

- Behavioral signatures of face and object recognition (Katharina Dobs)

- Perception of material qualities (Katja Dörschner)

- Haptic Perception and Visual-Haptic Interactions (Knut Drewing)

- Perceiving and Acting in Virtual Environments (Katja Fiehler)

- Sensorimotor predictions and control (Katja Fiehler)

- Action, perception and psychosis (Bianca van Kemenade and Katja Fiehler)

- Shape and material perception (Roland Fleming)

- Distribution of attention and gaze while gaming (Karl Gegenfurtner and Anna Schröger)

- Control of eye movements (Karl Gegenfurtner and Alexander Goettker)

- Color perception in virtual worlds (Karl Gegenfurtner and Raquel Gil)

- Brightness and saturation of objects (Karl Gegenfurtner and Laysa Hedjar)

- Individual differences in visual perception (Ben de Haas)

Lifespan neuropsychology of perception and sensorimotor control (Jutta Billino)

Variability in individual performance provides a window to plasticity in human perception and behavior. We investigate how sensory, motor, cognitive, and motivational processes are interwoven and how their interplay varies across individuals. Research topics include:

If you are interested in these topics, just send an email so that we can discuss current opportunities to get involved in our research: jutta.billino@psy.jlug.de

Representative Papers:

- Klever, L., Mamassian, P., & Billino, J. (2022). Age‑related differences in visual confidence are driven by individual differences in cognitive control capacities. Scientific Reports, 12, 6016. doi:10.1038/s41598-022-09939-7

- Hesse, C., Koroknai, L., & Billino, J. (2020). Individual differences in processing resources modulate bimanual interference in pointing. Psychological Research, 84, 440–453. doi: https://doi.org/10.1007/s00426-018-1050-3

- Klever, L., Voudouris, D., Fiehler, K., & Billino, J. (2019). Age effects on sensorimotor predictions: What drives increased tactile suppression during reaching? Journal of Vision, 19(9):9, 1-17. doi: https://doi.org/10.1167/19.9.9

- Billino, J., Hennig, J., & Gegenfurtner, K. (2016). The role of dopamine in anticipatory pursuit eye movements: Insights from genetic polymorphisms in healthy adults. eNeuro, 3(6). doi: https://doi.org/10.1523/ENEURO.0190-16.2016

Behavioral signatures of face and object recognition (Katharina Dobs)

From a brief glimpse of a complex scene, we recognize people and objects, their relationships to each other, and the overall gist of the scene – all within a few hundred milliseconds and with no apparent effort. What are the computations underlying this remarkable ability and how are they implemented in the brain? To address these questions, our lab bridges recent advances in machine learning with human behavioral and neural data to provide a computationally precise account of how visual recognition works in humans.

Faces are a ‘special’ case of visual stimuli. Numerous behavioral studies have revealed several hallmarks of face perception, such as the face inversion effect, the other-race effect, and the familiarity effect, while neural studies have identified and characterized a network of face-selective areas in the brain. Moreover, we detect faces faster than other visual objects, and we even see faces in things (known as ‘pareidolia’). Despite this wealth of empirical data, most theories of face perception still rely on vague verbal models (e.g., ‘holistic processing’) and cannot explain how the brain transforms sensory representations of a face image into an abstract representation of that face.

Here, we will use behavioral measures such as response times, face detection and recognition accuracy, and eye tracking to address questions such as:

- What information do we convey when we move our faces? Is there more than just facial expression information?

- Do we process faces in things in a way that is quantitatively similar to how we process real faces?

- Is the face inversion effect unique to faces, or can we also find it for other types of objects?

In these projects, you will learn more about face perception and visual recognition in general, how to set up behavioral or eye-tracking experiments, and how to analyze the results. If you like, we can also teach you more about deep neural networks as models of visual perception.

If you are interested or have any questions, please send an email to katharina.dobs@psychol.uni-giessen.de

[If you would like to complete your thesis with Dr. Dobs, please indicate "Karl Gegenfurtner" on the registration sheet, and we will assign you accordingly.]

Corresponding video from Dr. Katharina Dobs

Representative Papers:

- Dobs, K., Isik, L., Pantazis, D., & Kanwisher, N. (2019). How face perception unfolds over time. Nature Communications, 10(1), 1258.

- Dobs, K., Martinez, J., Kell, A., & Kanwisher, N. (2022). Brain-like functional specialization emerges spontaneously in deep neural networks. Science Advances, 8, eabl8913.

- Dobs, K., Yuan, J., Martinez, J., & Kanwisher, N. (2023). Behavioral signatures of face perception emerge in deep neural networks optimized for face recognition. PNAS, 120(32), e2220642120.

- Kanwisher, N., Khosla, M., & Dobs, K. (2023). Using artificial neural networks to ask 'why' questions of minds and brains. Trends in Neurosciences, 46, 72-88.

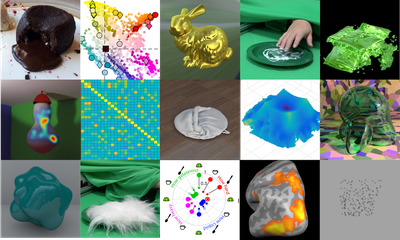

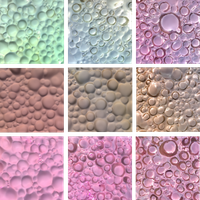

Perception of material qualities (Katja Dörschner)

Our visual experience of the world is as much defined by the material qualities of objects as it is by their shape properties: keys look shiny, a tree trunk looks rough, and chocolate soufflé looks airy. Humans can easily and nearly instantaneously identify shapes and properties of materials and adjust their actions accordingly. Yet how the perceptual system accomplishes this is largely unknown. Using a combination of psychophysics, image analysis, computational modeling, eye and hand tracking, and fMRI, we investigate questions such as: What information does the brain use to estimate and categorise material qualities? How do expectations about materials affect how we perceive them, look at them, or interact with them? Does freely interacting with a material change how it feels or looks?

If you are interested, just send an email to Katja.Doerschner@psychol.uni-giessen.de.

Current projects

- Power of dynamic contours in material perception

A line drawing represents an object by lines or contours but lacks color, texture, and optical information. Studies have shown that people are very efficient at recognizing objects and scenes from static line drawings, even though these are highly reduced versions of fully textured images (Biederman & Ju, 1988; Walther et al., 2011).

Figure 1: Fully textured and line-drawing versions of the same images

In our first set of experiments, we aimed to investigate how observers perceive material qualities from line drawings. Work on line drawings to date has focused on static images. However, we live in a dynamic environment, and motion plays an important role, especially in the perception of mechanical material properties. We therefore introduced dynamic line drawings, which depict material motion through moving contours, as shown in Figure 2. This first phase of experiments established that dynamic line drawings vividly convey mechanical material properties, implying that contour motion plays an important role in material perception.

Figure 2: Dynamic line drawing depicting material motion by contours.

The next question we aim to address is how the visual system extracts and integrates meaningful structure from moving contours. To answer it, we will conduct a set of experiments presenting either single frames or short sequences of frames to investigate over what time scale the information extracted from contours is integrated to estimate material properties.

- Can material motion be perceived from dynamic dot materials without local image motion?

Previous research shows that mechanical properties of materials can be conveyed by image motion alone (Bi et al., 2018; Schmid & Doerschner, 2018). These studies used dynamic dot materials, in which dots are embedded in or on the surface of materials (Figure 3). Such displays contain both global shape changes and local image motion.

Figure 3: Dynamic dot stimuli representing soft fabric

In this study, we seek to understand the individual roles of local image motion and shape cues in the perception of material properties. Specifically, we ask whether the perception of material motion requires motion detected at the level of individual points, or whether it is sufficient to detect changes at the level of global structure, such as changes in the overall shape of the material. We hypothesize that if local motion plays a crucial role in the perception of material motion, then disrupting local motion cues in dynamic dot displays will impair observers' ability to recognize materials in these displays.

Representative Papers:

- Schmid, A.C., Barla, P. & Doerschner, K. (2020). Material category determined by specular reflection structure mediates the processing of image features for perceived gloss. bioRxiv, doi: https://doi.org/10.1101/2019.12.31.892083

- Schmid, A.C., Boyaci, H. & Doerschner, K. (2020). Dynamic dot displays reveal material motion network in the human brain. bioRxiv, doi: https://doi.org/10.1101/2020.03.09.983593

- Alley, L.M., Schmid, A.C. & Doerschner, K. (2020). Expectations affect the perception of material properties. bioRxiv, doi:https://doi.org/10.1101/744458.

- Schmid, A.C. & Doerschner, K. (2019). Representing stuff in the human brain. Current Opinion in Behavioral Sciences, 30, 178-185.

- Toscani, M., Yucel, E. & Doerschner, K. (2019). Gloss and speed judgements yield different fine tuning of saccadic sampling in dynamic scenes. i-Perception, doi: https://doi.org/10.1177/2041669519889070

- Ennis, R. & Doerschner, K. (2019). The color appearance of three-dimensional, curved, transparent objects. bioRxiv, doi: https://doi.org/10.1101/2019.12.30.891341

- Cavdan, M., Doerschner, K. & Drewing, K. (2019). The many dimensions underlying perceived softness: How exploratory procedures are influenced by material and the perceptual task. IEEE World Haptics Conference. doi:https://doi.org/10.1109/WHC.2019.8816088

- Schmid, A.C. & Doerschner, K. (2018). The contribution of optical and mechanical properties to the perception of soft and hard breaking materials. Journal of Vision, 18(1):14, 1-32.

Haptic Perception and Visual-Haptic Interactions (Knut Drewing)

Haptic perception is an active process in which people gather information in a targeted manner. For example, if we want to feel how soft an object is, we first have to explore it in a suitable way so that our sensors pick up the relevant information. We investigate the mechanisms that control the movement-perception loop in human active touch, starting from the hypothesis that people perform their sensory movements "optimally". Further research foci include the perception of softness, emotional and motivational aspects of haptic perception, time perception, and the interplay of touch and vision, for example in the "size-weight illusion". If you are interested or have any questions, please send an email to knut.drewing@psychol.uni-giessen.de

Corresponding video from Prof. Dr. Knut Drewing

Representative Papers:

- Cavdan, M., Doerschner, K., & Drewing, K. (2019). The Many Dimensions Underlying Perceived Softness: How Exploratory Procedures are Influenced by Material and the Perceptual Task. IEEE World Haptics Conference, WHC 2019 (pp. 437-442), IEEE.

- Drewing, K., Weyel, C., Celebi, H., & Kaya, D. (2018). Systematic Relations between Affective and Sensory Material Dimensions in Touch. IEEE Transactions on Haptics 11(4), 611-622.

- Kaim, L. & Drewing, K. (2011). Exploratory strategies in haptic softness discrimination are tuned to achieve high levels of task performance. IEEE Transactions on Haptics, 4, 242-252.

- Wolf, C., Bergmann Tiest, W., & Drewing, K. (2018). A mass-density model can account for the size-weight illusion. PLOS ONE, 13(2), e0190624.

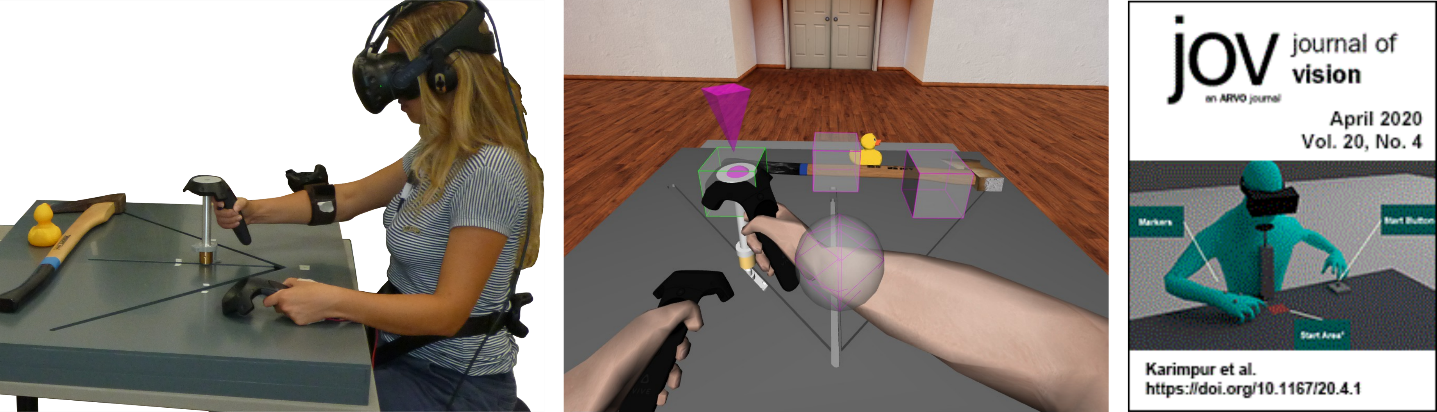

Perceiving and Acting in Virtual Environments (Katja Fiehler)

The information we perceive through our senses enables us to perform goal-directed actions. This involves integrating signals from our bodily senses with information about objects in our environment and about the space we are acting in. In this way, the actions we perform not only allow us to manipulate the external world but also inform us about ourselves by providing sensory feedback. In other words, the relation between perception and action is not unidirectional, and our external world can likewise influence our perception and behaviour.

The link between perception and action has often been tested in quite abstract or limited environments. Virtual reality (VR), however, allows us to create realistic-looking three-dimensional environments in which we can run our studies in a highly controlled manner. Our research group offers different topics related to perception and action in VR. Possible research questions you could investigate with us are:

- How do humans localize objects in 3D space?

- How do object and scene semantics influence object localization for actions, such as when looking for our keys in the living room?

- How do humans learn statistical regularities in the world, for example when and where an obstacle occurs, and adjust their behavior accordingly?

Check the individual websites of our team members (https://www.uni-giessen.de/fbz/fb06/psychologie/abt/allgemeine-psychologie/wh/Team) for more information on our research! Feel free to contact any of us if you are interested in a project or if you have any questions.

If you are interested or have any questions, please send an email to Katja.Fiehler@psychol.uni-giessen.de

Corresponding video from Prof. Dr. Katja Fiehler

Representative Papers:

- Karimpur, H., Eftekharifar, S., Troje, N., & Fiehler, K. (2020). Spatial coding for memory-guided reaching in visual and pictorial spaces. Journal of Vision, 20(4): 1, 1-12.

- Karimpur, H., Morgenstern, Y., & Fiehler, K. (2019). Facilitation of allocentric coding by virtue of object-semantics. Scientific Reports, 9, 6263.

- Klinghammer, M., Schütz, I., Blohm, G., & Fiehler, K. (2016). Allocentric information is used for memory-guided reaching in depth: a virtual reality study. Vision Research, 129, 13-24.

Sensorimotor predictions and control (Katja Fiehler)

Performing complex movements is a remarkably difficult task. First, humans make predictions about what will happen in the near future, which facilitates movement planning. Second, they use sensory feedback to correct any movement errors. Third, the human nervous system needs to deal with the abundance of sensory information when acting in a complex world. Interestingly, when predictions about future events are accurate, the associated sensory feedback is suppressed. This is evidenced by our inability to tickle ourselves. Like tactile sensations during self-tickling, auditory and visual sensations arising from our actions are also suppressed.

Our aim is to understand (1) the relationship between predictive and sensory feedback signals during goal-directed movements, and (2) how the processing of sensory information is shaped by predictive mechanisms and by the performed action itself. Possible research questions you could investigate with us are:

- How do sensorimotor predictions change tactile perception?

- How do sensorimotor predictions change across the lifespan?

- How do task demands influence sensorimotor predictions?

You will work closely with your advisor as part of an internationally vibrant group. During your project you will get the opportunity to learn how to program behavioural experiments, record arm movements and analyse behavioural and psychophysical data.

If you are interested or have any questions, please send an email to Katja.Fiehler@psychol.uni-giessen.de

Corresponding video from Prof. Dr. Katja Fiehler

Representative Papers:

- McManus, M., Schütz, I., Voudouris, D., & Fiehler, K. (2023). How visuomotor predictability and task demands affect tactile sensitivity on a moving limb during object interaction. Royal Society Open Science, 10(12), 231259.

- Fuehrer, E., Voudouris, D., Lezkan, A., Drewing, K., & Fiehler, K. (2022). Tactile suppression stems from specific sensorimotor predictions. PNAS, 119(20), e2118445119.

- Voudouris, D. & Fiehler, K. (2022). The role of grasping demands on tactile suppression. Human Movement Science, 83, 102957.

Action, perception and psychosis (Bianca van Kemenade and Katja Fiehler)

Whenever we make a movement, we generate sensory stimuli. For example, when we knock on a door, we generate both a sound and tactile input to our fingers. In a world full of sensory information, it is crucial to understand which stimuli were the consequence of our own action, and which stimuli came from the environment. The importance of this ability is illustrated by cases in which this goes wrong, such as in patients with schizophrenia who feel that their own thoughts and actions are externally controlled.

Research has shown that we make predictions about the sensory consequences of our actions, which helps us to control our movements. Furthermore, these predictions seem to affect our perception, often leading to suppression of self-generated stimuli (explaining why we can’t tickle ourselves), though sometimes action can also enhance our perception. It is thought that these predictions also help us to distinguish self-generated from externally generated stimuli.

Our aim is to understand 1) how the brain creates predictions about sensory action outcomes, 2) why action sometimes suppresses and sometimes enhances our perception, and 3) which part of these processes is altered in patients with schizophrenia, and potentially also already in healthy participants with subclinical symptoms. We perform behavioural and fMRI experiments to answer these questions.

Note: potential Master theses will focus on healthy participants, and can include either behavioural or fMRI experiments.

If you are interested or have any questions, please send an email to bianca.van-kemenade@psychiat.med.uni-giessen.de

[If you would like to complete your thesis with Prof. van Kemenade, please indicate "Katja Fiehler" on the registration sheet, and we will assign you accordingly.]

Corresponding video from Prof. Dr. Bianca van Kemenade

Representative Papers:

- Lubinus, C., Einhäuser, W., Schiller, F., Kircher, T., Straube, B.*, van Kemenade, B.M.* (2022). Action-based predictions affect visual perception, neural processing, and pupil size, regardless of temporal predictability. NeuroImage, 263(119601):1-13

- Uhlmann, L., Pazen, M., van Kemenade, B.M., Kircher, T., & Straube, B. (2021). Neural correlates of self-other distinction in patients with schizophrenia spectrum disorders: The roles of agency and hand identity. Schizophrenia Bulletin, 47(5):1399–1408

- Van Kemenade, B.M., Arikan, B.E., Podranski, K., Steinsträter, O., Kircher, T., & Straube, B (2019). Distinct roles for the cerebellum, angular gyrus and middle temporal gyrus in action-feedback monitoring. Cerebral Cortex, 29(4), 1520–153

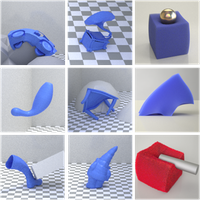

Shape and material perception (Roland Fleming)

How does the brain work out the physical properties of objects, and how does it use them to guide our actions towards these objects? One important source of information about what objects are made of, and how they respond to forces, is their shape. For example, soft materials rarely have hard corners, and rubbery materials can be bent. Shiny objects look more or less glossy depending on their surface geometry. And where we grasp an object depends strongly on its shape.

We are trying to understand how 3D shape and material properties interact, and how both affect behavior. The proposed projects use either (i) computer graphics and perception experiments to work out how the brain estimates the properties of objects based on shape, or (ii) real objects and grasping experiments to work out how estimates of object properties guide our actions. The thesis can be written in German or English. Possible research topics include:

- How do we work out material properties like softness or glossiness from object shape and object motion across many different simulated objects? What shape features or other sources of information does the visual system use when estimating these properties?

- How do we integrate shape and material of objects to guide our actions? How do we figure out the optimal way to grasp and lift different objects? How do we decide where to place our fingers?

If you are interested or have any questions, please send an email to Roland.W.Fleming@psychol.uni-giessen.de.

Corresponding video from Prof. Dr. Roland Fleming

Representative Papers:

- Klein, L.*, Maiello, G.*, Paulun, V. C., & Fleming, R. W. (2020). Predicting precision grip grasp locations on three-dimensional objects. PLoS Computational Biology, 16(8): e1008081. [* equal authorship]

- Phillips, F.*, & Fleming, R. W.* (2020). The Veiled Virgin illustrates visual segmentation of shape by cause. Proceedings of the National Academy of Sciences, 201917565. [* equal authorship]

- Van Assen, J. J., Nishida, S., & Fleming, R. W. (2020). Visual perception of liquids: Insights from deep neural networks. PLoS Computational Biology, 16(8): e1008018.

- Fleming, R. W., & Storrs, K. R. (2019). Learning to See Stuff. Current Opinion in Behavioral Sciences, 30: 100–108.

- Schmidt, F., Phillips, F., & Fleming, R. W. (2019). Visual perception of shape-transforming processes: ‘Shape Scission’. Cognition, 189, 167-180.

- Van Assen, J. J., Barla, P., & Fleming, R. W. (2018). Visual Features in the Perception of Liquids. Current Biology, 28(3), 452–458.

- Fleming, R. W. (2017). Material Perception. Annual Review of Vision Science, 3(1), 365-388.

- Schmidt, F., & Fleming, R. W. (2016). Visual perception of complex shape-transforming processes. Cognitive Psychology, 90: 48–70.

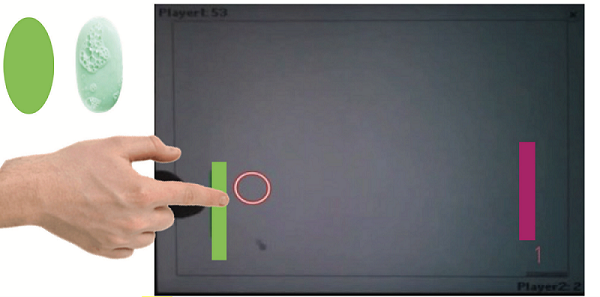

Distribution of attention and gaze while playing on an iPad (Karl Gegenfurtner and Anna Schröger)

When we observe a moving object, such as a ball, we make predictions about its future trajectory. For example, we may predict whether the ball will continue its motion, bounce off a wall, or be caught by a person. These predictions are based on our experience with the environment. However, if the conditions suddenly change (e.g., if the ball starts to spin or darkness limits our vision), our predictions may be inaccurate.

To study these processes, researchers analyze the eye and hand movements of participants looking at or interacting with a moving object. Such studies reveal that participants can follow a ball smoothly with their gaze and make saccades to locations where they predict important events will occur (e.g., the bounce of a ball).

In this project, we investigate these anticipatory eye movements to understand how subjects react to unexpected situations and how quickly they adapt to new conditions. We plan to have subjects play a computer game called "Pong" on an iPad while manipulating the properties of the ball, the context, or the interaction of the player with the touchscreen.

If you are interested or have any questions, please send an email to anna.schroeger@psychol.uni-giessen.de.

Representative Papers:

- Chen, J., Valsecchi, M., & Gegenfurtner, K. R. (2016). Role of motor execution in the ocular tracking of self-generated movements. Journal of Neurophysiology, 116(6), 2586-2593.

- Fooken, J., Kreyenmeier, P., & Spering, M. (2021). The role of eye movements in manual interception: A mini-review. Vision Research, 183, 81-90.

Control of eye movements (Karl Gegenfurtner and Alexander Goettker)

Our eyes move continuously and provide direct access to the processing of information in our brain: we look at the things that are of interest to us. How quickly and accurately we can look at relevant things depends on many factors. Experience and knowledge can help us find interesting objects faster, additional information allows us to better predict movements, and the expectation of reward can influence and accelerate these processes. We want to investigate these mechanisms by measuring people's eye movements during different tasks.

- Comparison of simple and naturalistic stimuli: is following a dot the same as following a puck in an ice hockey game?

- Sequential effects and experience: How do our eye movements change based on our experiences and expectations? Is tracking a snail the same as tracking a racing car moving at the same velocity? How do we integrate our experience into the next trial?

Corresponding video from Prof. Dr. Karl Gegenfurtner

Representative Papers:

- Dorr, M., Martinetz, T., Gegenfurtner, K. R., & Barth, E. (2010). Variability of eye movements when viewing dynamic natural scenes. Journal of Vision, 10(10), 28-28.

- Kowler, E., Aitkin, C. D., Ross, N. M., Santos, E. M., & Zhao, M. (2014). Davida Teller Award Lecture 2013: The importance of prediction and anticipation in the control of smooth pursuit eye movements. Journal of Vision, 14(5), 10-10.

- Schütz, A. C., Trommershäuser, J., & Gegenfurtner, K. R. (2012). Dynamic integration of information about salience and value for saccadic eye movements. Proceedings of the National Academy of Sciences, 109(19), 7547-7552.

- Schütz, A. C., Braun, D. I., & Gegenfurtner, K. R. (2011). Eye movements and perception: A selective review. Journal of Vision, 11(5), 9-9.

Color perception in virtual worlds (Karl Gegenfurtner and Raquel Gil)

Our perception of color depends not only on the composition of the light that is reflected from an object and reaches our eye, but also on the distribution of light throughout the scene. Our visual system has the remarkable ability to adapt to the dominant illumination and to assign invariant colors to objects, that is, colors that depend only on the object itself. Virtual reality provides a way to arbitrarily change object colors, illuminations, and their interactions to find out how our visual system achieves color constancy. We use photo-realistic virtual worlds with which the subjects can interact.

During your work, you will learn the basics of color perception and color constancy, become familiar with virtual reality technology, and thoroughly learn psychophysical measurement techniques.

If you are interested or have any questions, please send an email to gegenfurtner@uni-giessen.de

Corresponding video from Prof. Dr. Karl Gegenfurtner

Representative Papers:

- Witzel, C. & Gegenfurtner, K.R. (2018) Color perception: objects, constancy, and categories. Annual Review of Vision Science, 4, 475-499.

Brightness and saturation of objects (Karl Gegenfurtner and Laysa Hedjar)

Despite a high degree of standardization that allows faithful reproduction of the colors of individual pixels in print and on displays, it is not yet known what factors underlie the perception of brightness and saturation of whole objects. We want to investigate this with photographs of objects as well as with objects generated by computer graphics. Subjects will estimate the brightness and saturation of these objects, and we will examine how their judgments correlate with properties of the color and intensity distributions in the image regions corresponding to the objects.

During your work, you will learn the basics of color perception, create artificial objects using computer graphics (e.g., Blender), and thoroughly learn psychophysical measurement methods.

If you are interested or have any questions, please send an email to gegenfurtner@uni-giessen.de

Corresponding video from Prof. Dr. Karl Gegenfurtner

Representative Papers:

- Witzel, C. & Gegenfurtner, K.R. (2018) Color perception: objects, constancy, and categories. Annual Review of Vision Science, 4, 475-499.

Individual differences in visual perception (Ben de Haas)

Does the world look the same to everyone? How could we know if visual perception varies from one person to the next?

The functional architecture of the human visual system only clearly resolves those parts of a scene that we look at directly; cluttered objects in the periphery are hard to tell apart. Therefore, visual perception is an active process: we constantly have to move our eyes to fixate visual objects of interest, and the visual system needs to identify such fixation targets based on incomplete peripheral information.

Computational ‘salience models’ try to predict which parts of an image will be fixated, based on properties of the image. However, we recently found that fixation targets are not just a function of the image, but also of the individual observer. Observers freely viewing complex scenes systematically differ in the degree to which they are attracted by different types of visual objects. For instance, the eye movements of some people are more attracted to faces within the scenes than are those of others. Similar differences can be seen for the gaze ‘pull’ afforded by text, food, hands and other stimulus types.

We will run follow-up behavioural and eye-tracking experiments to better understand the causes and consequences of these individual differences. For instance, we are currently investigating whether individual eye movements predict individual differences in verbal descriptions of complex scenes.

If you are interested or have any questions, please send an email to benjamindehaas@gmail.com

Representative Papers:

- De Haas, B., Iakovidis, A. L., Schwarzkopf, D. S., & Gegenfurtner, K. R. (2019). Individual differences in visual salience vary along semantic dimensions. Proceedings of the National Academy of Sciences, 116(24), 11687-11692.

- Constantino, J. N., Kennon-McGill, S., Weichselbaum, C., Marrus, N., Haider, A., Glowinski, A. L., ... & Jones, W. (2017). Infant viewing of social scenes is under genetic control and is atypical in autism. Nature, 547(7663), 340.

- Kennedy, D. P., D’Onofrio, B. M., Quinn, P. D., Bölte, S., Lichtenstein, P., & Falck-Ytter, T. (2017). Genetic influence on eye movements to complex scenes at short timescales. Current Biology, 27(22), 3554-3560.

- Xu, J., Jiang, M., Wang, S., Kankanhalli, M. S., & Zhao, Q. (2014). Predicting human gaze beyond pixels. Journal of Vision, 14(1), 28-28.