When Self Humanization Leads to Algorithm Aversion

What users want from decision support systems on prosocial microlending platforms

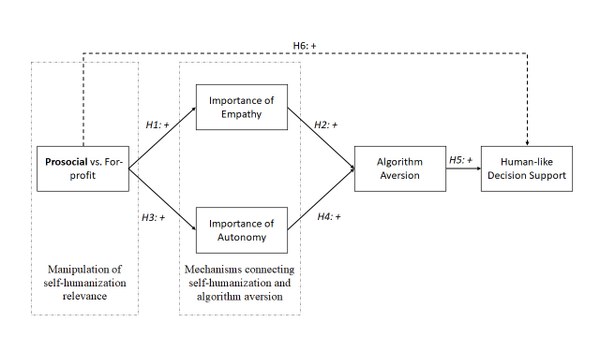

Decision support systems are increasingly being adopted by digital platforms. However, prior research has shown that certain contexts can trigger algorithm aversion, causing people to reject algorithmic decision support. This paper investigates how and why the decision context (for-profit versus prosocial microlending decisions) affects users' degree of algorithm aversion and, ultimately, their preference for more human-like (versus computer-like) decision support systems. We argue that contexts differ in the affordances they offer for self-humanization. We propose that people perceive prosocial decisions as more relevant to self-humanization and therefore ascribe more importance to empathy and autonomy when making them. This heightened importance of empathy and autonomy leads to a higher degree of algorithm aversion. At the same time, it also leads to a stronger preference for human-like decision support, which could therefore serve as a remedy for such self-humanization-induced algorithm aversion. The results of an online experiment support our theorizing. We discuss both theoretical and design implications, especially the potential of anthropomorphized conversational agents on platforms for prosocial decision-making.